Anomaly Detection – Using Machine Learning to Detect Abnormalities in Time Series Data

This post was co-authored by Vijay K Narayanan, Partner Director of Software Engineering on the Azure Machine Learning team at Microsoft.

Introduction

Anomaly detection is the problem of finding patterns in data that do not conform to a model of “normal” behavior. Detecting such deviations from expected behavior in temporal data is important for ensuring the normal operation of systems across many domains, such as economics, biology, computing, finance and ecology. Applications in these domains need to detect abnormal behavior, which can indicate system failures or malicious activity, and to trigger the appropriate corrective actions. In each case, it is important to characterize what is normal, what is deviant or anomalous, and how significant the anomaly is. This characterization is straightforward for systems whose behavior can be specified with simple mathematical models, for example, samples drawn from a Gaussian distribution with known mean and standard deviation. However, most interesting real-world systems have complex behavior over time. For such systems, the normal state must be characterized by observing data about the system over a period when its observers and users deem it to be behaving normally, and this characterization then serves as the baseline for flagging anomalous behavior.

Machine learning is useful for learning the characteristics of a system from observed data. Common anomaly detection methods for time series data learn the parameters of the data distribution in windows over time and flag as anomalies the data points that have a low probability of being generated from that distribution. Another class of methods includes sequential hypothesis tests, such as cumulative sum (CUSUM) charts and the sequential probability ratio test (SPRT), which can identify certain types of changes in the distribution of the data online. All of these methods alert on changes in the value of some characteristic of the distribution using predefined thresholds, and they operate on the raw time series values. At their core, all of them test whether the sequence of values in a time series is consistent with having been generated by an i.i.d. (independent and identically distributed) process.
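To make the two families concrete, here is a minimal sketch, not the method used by the service: a sliding-window Gaussian model that scores each point by its two-sided tail probability, and a one-sided tabular CUSUM for an upward mean shift. The function names, window size, target mean, slack and threshold are all illustrative assumptions.

```python
import math
import statistics
from collections import deque


def gaussian_window_tail_probs(xs, window_size):
    """For each x_t, fit N(mu, sigma^2) to the previous `window_size` points and
    return the two-sided tail probability P(|Z| >= |x_t - mu| / sigma).
    Small values mean x_t is unlikely under the recent distribution."""
    window = deque(maxlen=window_size)
    probs = []
    for x in xs:
        if len(window) < 2:
            probs.append(1.0)  # not enough history to estimate sigma yet
        else:
            mu = statistics.fmean(window)
            sigma = statistics.stdev(window)
            if sigma == 0.0:
                probs.append(1.0 if x == mu else 0.0)
            else:
                z = abs(x - mu) / sigma
                probs.append(math.erfc(z / math.sqrt(2.0)))
        window.append(x)
    return probs


def cusum_upper(xs, target_mean, slack, threshold):
    """One-sided tabular CUSUM for an upward mean shift.

    S_t = max(0, S_{t-1} + x_t - target_mean - slack); alarm when S_t > threshold.
    Returns the index of the first alarm, or None if no alarm is raised."""
    s = 0.0
    for t, x in enumerate(xs):
        s = max(0.0, s + x - target_mean - slack)
        if s > threshold:
            return t
    return None


if __name__ == "__main__":
    series = [10.0, 11.0] * 20 + [30.0]
    print(gaussian_window_tail_probs(series, window_size=10)[-1])
    print(cusum_upper([0.0] * 50 + [2.0] * 20, target_mean=0.0, slack=0.5, threshold=4.0))
```

Both detectors need a hand-picked threshold (a tail probability cut-off, or h for CUSUM) whose meaning depends on the scale and shape of the raw values, which is the limitation the exchangeability approach below addresses.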

Exchangeability Martingales

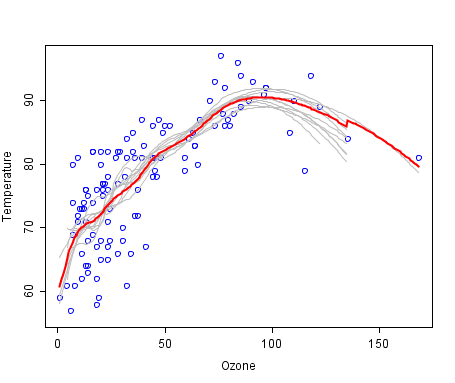

A direct way to detect changes in the distribution of time series values is to use exchangeability martingales (EMs) to test whether the values are i.i.d. ([3], [4] and [5]). A sequence of random variables is exchangeable if its joint distribution is invariant to any permutation of the order of the variables; every i.i.d. sequence is exchangeable. The basic idea is that an EM stays small while the data are drawn from the same distribution, but grows to a large value when the exchangeability assumption is violated.
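The sketch below follows the randomized power martingale construction described in [3]: each new point receives a conformal p-value from the rank of its strangeness among all points seen so far, and the martingale multiplies in a factor ε·p^(ε-1) per point. The strangeness function (absolute distance from the running mean) and ε = 0.92 are assumptions chosen for illustration, not the settings of any particular service.

```python
import random


def conformal_p_values(xs, rng):
    """Randomized conformal p-values for an online stream.

    At step n the strangeness of every point seen so far is its absolute distance
    from their current mean (an assumed, simple strangeness function). The
    p-value of the newest point is the fraction of points at least as strange,
    with ties weighted by a uniform random theta."""
    p_values = []
    for n in range(1, len(xs) + 1):
        seen = xs[:n]
        mean = sum(seen) / n
        alphas = [abs(x - mean) for x in seen]
        a_new = alphas[-1]
        greater = sum(a > a_new for a in alphas)
        equal = sum(a == a_new for a in alphas)  # includes the newest point itself
        theta = 1.0 - rng.random()  # uniform on (0, 1], so p is never 0
        p_values.append((greater + theta * equal) / n)
    return p_values


def power_martingale(p_values, epsilon=0.92):
    """Power martingale M_n = prod_{i<=n} epsilon * p_i ** (epsilon - 1), M_0 = 1.

    Each factor integrates to 1 over p in [0, 1], so M_n is a martingale when the
    p-values are i.i.d. uniform, which holds under exchangeability."""
    m = 1.0
    path = []
    for p in p_values:
        m *= epsilon * p ** (epsilon - 1.0)
        path.append(m)
    return path


if __name__ == "__main__":
    data_rng = random.Random(0)
    stream = [data_rng.gauss(0.0, 1.0) for _ in range(100)]
    stream += [1000.0 + data_rng.gauss(0.0, 1.0) for _ in range(50)]  # level shift
    path = power_martingale(conformal_p_values(stream, random.Random(1)))
    print("before shift:", path[99], "after shift:", path[149])
```

Small p-values (strange points) give factors above 1, while typical p-values shrink the martingale slightly, so it drifts down on exchangeable data and climbs after a change. Recomputing every strangeness score at each step costs O(n²) over the stream; a windowed variant keeps the cost bounded.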

EM-based anomaly scores for detecting changes in the distribution of time series values have several properties that are useful for anomaly detection in dynamic systems:

- Different types of anomalies (e.g., an increased dynamic range of values, a level shift in the values, slow trends) can be detected by transforming the raw data with a strangeness function that captures abnormal behavior in the domain. For example, an upward trend in the values probably indicates a memory leak in a computing context, while it may be expected behavior in the growth rate of a population. When the time series is seasonal or has other predictable patterns, the strangeness function can instead be defined on the residuals that remain after subtracting a forecast from the observed values.

- Anomaly scores are computed online, keeping only a window of the recent time series history.

- A threshold on the martingale value controls false positives. Further, this threshold has the same dynamic range irrespective of the absolute values of the time series or of the strangeness function, and it has a probabilistic interpretation in terms of the expected false positive rate: a nonnegative martingale that starts at 1 exceeds a threshold λ with probability at most 1/λ when the data are exchangeable ([3]).
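The three properties above can be sketched in a few lines, under stated assumptions: a seasonal-naive forecast (the value one period earlier) stands in for whatever forecasting model is used, strangeness is the distance from the median of a bounded window of recent residuals, and the false alarm bound comes from Ville's inequality. All function names are hypothetical.

```python
import statistics
from collections import deque


def seasonal_residuals(series, period):
    """Residuals after subtracting a seasonal-naive forecast x[t - period]."""
    return [series[t] - series[t - period] for t in range(period, len(series))]


def online_strangeness(values, window_size):
    """Score each value against only the previous `window_size` values.

    Strangeness is |x - median(window)|; memory stays bounded by the window.
    The first value has no history and scores 0."""
    window = deque(maxlen=window_size)
    scores = []
    for x in values:
        scores.append(abs(x - statistics.median(window)) if window else 0.0)
        window.append(x)
    return scores


def false_alarm_bound(threshold):
    """Ville's inequality: for a nonnegative martingale with M_0 = 1, the
    probability that it ever reaches `threshold` is at most 1 / threshold."""
    return 1.0 / threshold


if __name__ == "__main__":
    series = [1.0, 2.0, 3.0, 4.0] * 5  # period-4 seasonal pattern
    series[13] += 10.0  # injected spike
    scores = online_strangeness(seasonal_residuals(series, 4), window_size=4)
    print([i for i, s in enumerate(scores) if s > 0])
    print(false_alarm_bound(20.0))
```

Scored on raw values, this seasonal pattern would look strange every cycle; on residuals, only the injected spike stands out. The seasonal-naive forecast also echoes the spike one period later as a dip, which is one reason a smoother forecast is usually preferred. A threshold of λ = 20 bounds the false alarm probability at 5% no matter what units the KPI is in.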

Anomaly Detection Service on Azure Marketplace

We have published an anomaly detection service in the Azure Marketplace for intelligent web services. This service can detect the following types of anomalies in time series data:

- Positive and negative trends: When monitoring memory usage in computing, for instance, an upward trend is indicative of a memory leak,

- Increase in the dynamic range of values: As an example, when monitoring the exceptions thrown by a service, an increase in the dynamic range of values could indicate that the service is becoming unstable, and

- Spikes and Dips: For instance, when monitoring the number of login failures to a service or number of checkouts in an e-commerce site, spikes or dips could indicate abnormal behavior.
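Each anomaly type corresponds to a different quantity computed over a window of recent values. The functions below are hypothetical examples of such strangeness features, written for illustration only; the post does not describe the strangeness functions the service actually uses.

```python
import statistics


def trend_slope(window):
    """Least-squares slope of the window values against their time index
    (needs at least 2 values). A sustained positive slope suggests an upward
    trend, such as a memory leak."""
    n = len(window)
    t_mean = (n - 1) / 2.0
    y_mean = sum(window) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(window))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def dynamic_range(window):
    """Spread between the largest and smallest values in the window."""
    return max(window) - min(window)


def spike_deviation(window):
    """Signed deviation of the newest value from the median of the earlier ones:
    large positive values are spikes, large negative values are dips."""
    *history, latest = window
    return latest - statistics.median(history)


if __name__ == "__main__":
    print(trend_slope([100.0, 104.0, 108.0, 112.0]))  # steadily growing memory
    print(dynamic_range([3.0, 40.0, 1.0, 25.0]))      # widening exception counts
    print(spike_deviation([50.0, 52.0, 49.0, 51.0, 140.0]))  # burst of login failures
```

Any of these features can serve as the strangeness score fed into an exchangeability martingale, so the same alerting machinery covers all three anomaly types.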

The service provides a REST-based API over HTTPS that can be consumed in different ways, including from a web or mobile application, R, Python or Excel. We have an Azure web application that demonstrates the anomaly detection web service. You can also send your own time series data to the service via a REST API call, and it runs detectors for the combination of the three anomaly types described above. The service runs on the Azure Machine Learning (AzureML) platform, which scales seamlessly to your business needs and provides an SLA of 99.9%.
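As a rough illustration of calling a REST scoring endpoint from Python, the sketch below uses only the standard library to build an HTTPS POST carrying a time series as JSON. The endpoint URL, the API key, the `series` field name and the bearer-token header are all hypothetical placeholders, not the service's documented request format; check the service's documentation for the real schema before adapting it.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the endpoint, key and request schema
# documented for the service you are calling.
ENDPOINT = "https://example.invalid/anomaly-detection/score"
API_KEY = "YOUR-API-KEY"


def build_request(timestamps, values, endpoint=ENDPOINT, api_key=API_KEY):
    """Build (without sending) an HTTPS POST whose JSON body carries the series.
    The "series" field and the bearer-token header are assumptions."""
    payload = {"series": [[t, v] for t, v in zip(timestamps, values)]}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


def score(request, timeout=30.0):
    """Send the request and decode the JSON response (requires a real endpoint)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_request(["2015-06-01T00:00:00Z", "2015-06-01T00:05:00Z"], [12.0, 13.5])
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Separating request construction from sending makes the payload easy to inspect and test offline; `score` is only meaningful once the placeholders are replaced with real values.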

Application to Cloud Service Monitoring

Clusters of commodity compute and storage devices interconnected by networks are routinely used to deliver high-quality services for enterprise and consumer applications cost-effectively. Real-time operational analytics that monitor, alert on and recover from failures in any component of the system are necessary to guarantee the SLAs of these services. A naïve rule-based approach, which alerts whenever a component's KPI takes a value outside a fixed range, can easily produce a large number of false positive alerts in any service of reasonable size. Further, tuning the thresholds for thousands of KPIs in a dynamic system is non-trivial. Because of the advantages listed earlier, EMs are particularly well suited for detecting and alerting on changes in the KPIs of these systems. The alerts generated by this system are handled by automated healing processes and human systems experts, helping the SQL Database service on Azure meet its SLA of 99.99%, the first cloud database to achieve this level of SLA.
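One reason a single martingale threshold can be shared across thousands of KPIs is that conformal p-values depend only on the ranks of strangeness scores. The minimal sketch below, assuming a sliding window and |x - window mean| as the strangeness function (the function name is hypothetical), shows that re-expressing a KPI in different units with an offset leaves the p-values, and hence any martingale built on them, unchanged. The invariance holds for positive affine rescaling with this strangeness function, not for arbitrary transformations.

```python
import random
from collections import deque


def windowed_p_values(xs, window_size, seed=0):
    """Conformal p-value of each new point against a sliding window of history.

    Strangeness is |x - window mean| (an assumed choice). Only the ranks of
    the strangeness scores enter the p-value, and a fixed seed makes the
    random tie-breaking reproducible."""
    rng = random.Random(seed)
    window = deque(maxlen=window_size)
    out = []
    for x in xs:
        window.append(x)
        bag = list(window)
        mean = sum(bag) / len(bag)
        alphas = [abs(v - mean) for v in bag]
        a_new = alphas[-1]
        greater = sum(a > a_new for a in alphas)
        equal = sum(a == a_new for a in alphas)
        theta = 1.0 - rng.random()  # uniform on (0, 1]
        out.append((greater + theta * equal) / len(bag))
    return out


if __name__ == "__main__":
    latency_s = [3.0, 5.0, 4.0, 9.0, 4.5, 5.5, 20.0]
    latency_ms_offset = [1000.0 * x + 5.0 for x in latency_s]
    print(windowed_p_values(latency_s, 4))
    print(windowed_p_values(latency_ms_offset, 4))
```

Both calls print identical p-values, so a threshold tuned on one KPI carries its meaning over to another with completely different magnitudes.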

Anomaly Detection for Log Analytics

Most log analytics platforms provide an easy way to search through system logs once a problem has been identified. However, proactive detection of ongoing anomalous behavior is important for staying ahead of the curve in managing complex systems. Microsoft and Sumo Logic have been partnering to broaden the machine-learning-based anomaly detection capabilities for log analytics. The seamless cloud-to-cloud integration between Microsoft AzureML and Sumo Logic provides customers with a comprehensive machine learning solution for detecting and alerting on anomalous events in logs. End users can consume the integrated anomaly detection capabilities in their Sumo Logic service with minimal effort, relying on the combined power of proven technologies to monitor and manage complex system deployments.

Vijay K Narayanan, Alok Kirpal, Nikos Karampatziakis


References

- [1] Intelligent web services on Azure Marketplace.

- [2] Anomaly detection service on Azure Marketplace.

- [3] Vladimir Vovk, Ilia Nouretdinov, Alex J. Gammerman, “Testing Exchangeability Online”, ICML 2003.

- [4] Shen-Shyang Ho, Harry Wechsler, “A Martingale Framework for Detecting Changes in Data Streams by Testing Exchangeability”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 32, no. 12, pp. 2113-2127, Dec. 2010.

- [5] Valentina Fedorova, Alex J. Gammerman, Ilia Nouretdinov, Vladimir Vovk, “Plug-in martingales for testing exchangeability on-line”, ICML 2012.
